Nvidia operates under a cultural paradox. The company maintains a motto of being "always 30 days away from going out of business" despite commanding around 90% of the AI accelerator market and generating $130.5 billion in fiscal 2025 revenue. This survival mentality drives an aggressive annual product cadence that has transformed Nvidia from a 1990s graphics chip designer into the company powering virtually every major AI breakthrough.

The numbers tell a dramatic story. Data center revenue jumped from $15 billion to $115 billion in just two years. And Nvidia forecasts selling $500 billion worth of Blackwell and Rubin GPUs by the end of 2026.

In this analysis, we'll unpack how Nvidia built an economic moat through the CUDA ecosystem, why its margins exceed those of most software companies, and how the company is positioning itself as the primary operator of "AI Superfactories."

How Nvidia works

Nvidia was founded in 1993 by Jensen Huang, Chris Malachowsky, and Curtis Priem. The company invented the GPU in 1999, originally to render video game graphics. Today, it operates on a philosophy of "accelerated computing" that challenges sixty years of CPU dominance.

The company's core mission addresses a fundamental shift in computing. AI researchers began training large language models requiring trillions of simultaneous mathematical operations. Nvidia's answer: parallel processing that decomposes massive computational tasks into millions of smaller calculations executed simultaneously across thousands of specialized cores.
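The decomposition idea can be sketched in plain Python. This is only an illustration under stated assumptions: CPU threads stand in for GPU cores, the function names `scale_and_add` and `parallel_saxpy` are hypothetical, and none of this is CUDA or Nvidia code. It shows one large element-wise calculation being split into independent chunks that a pool of workers can process without coordinating with each other.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_and_add(chunk_a, chunk_b, alpha=2.0):
    """One small, independent piece of work: alpha * a + b for one slice."""
    return [alpha * x + y for x, y in zip(chunk_a, chunk_b)]


def parallel_saxpy(a, b, num_chunks=8, alpha=2.0):
    """Split two equal-length vectors into chunks and process each chunk independently."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    # Ceiling division so that every element lands in some chunk.
    size = max(1, -(-len(a) // num_chunks))
    starts = range(0, len(a), size)
    with ThreadPoolExecutor(max_workers=num_chunks) as pool:
        # pool.map returns results in the same order as the chunk starts.
        parts = pool.map(
            lambda s: scale_and_add(a[s:s + size], b[s:s + size], alpha),
            starts,
        )
        return [value for part in parts for value in part]


a = list(range(1000))
b = [1.0] * 1000
result = parallel_saxpy(a, b)
print(result[:3], result[-1])
```

Because no chunk depends on another, the same pattern scales to far more workers. That independence is the property a GPU exploits when it spreads a calculation across thousands of cores.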

Nvidia offers full-stack infrastructure including GPUs, CPUs (Grace), networking hardware (Mellanox), and software (CUDA). Its integrated systems solve the entire accelerated computing challenge.

But the hardware is only part of the story. CUDA, launched in 2006, is Nvidia’s software platform for programming GPUs. It lets developers use familiar languages like C++ and Python to run general-purpose code on Nvidia chips. Over time, CUDA became the default layer for GPU computing. Most modern AI frameworks are built on top of it.

The 2020 acquisition of Mellanox for roughly $7 billion was another vital milestone. Mellanox builds high-performance networking gear that connects GPUs inside servers and across data centers. The deal turned Nvidia from a chip supplier into a full-stack infrastructure vendor.

That shift matters because modern AI systems are limited less by chip speed and more by how thousands of chips communicate. Nvidia now controls that layer. It sells NVLink for fast connections inside racks, InfiniBand for low-latency clusters, and Spectrum-X Ethernet for shared environments. Better networking raises overall system efficiency by double-digit percentages. At hyperscale, those gains translate into billions of dollars of usable compute.

Machines that TSMC uses to produce chips

Nvidia operates as a fabless semiconductor company, designing chips in-house but outsourcing manufacturing to foundries like TSMC. This model allows focus on architecture and software while partners handle production.

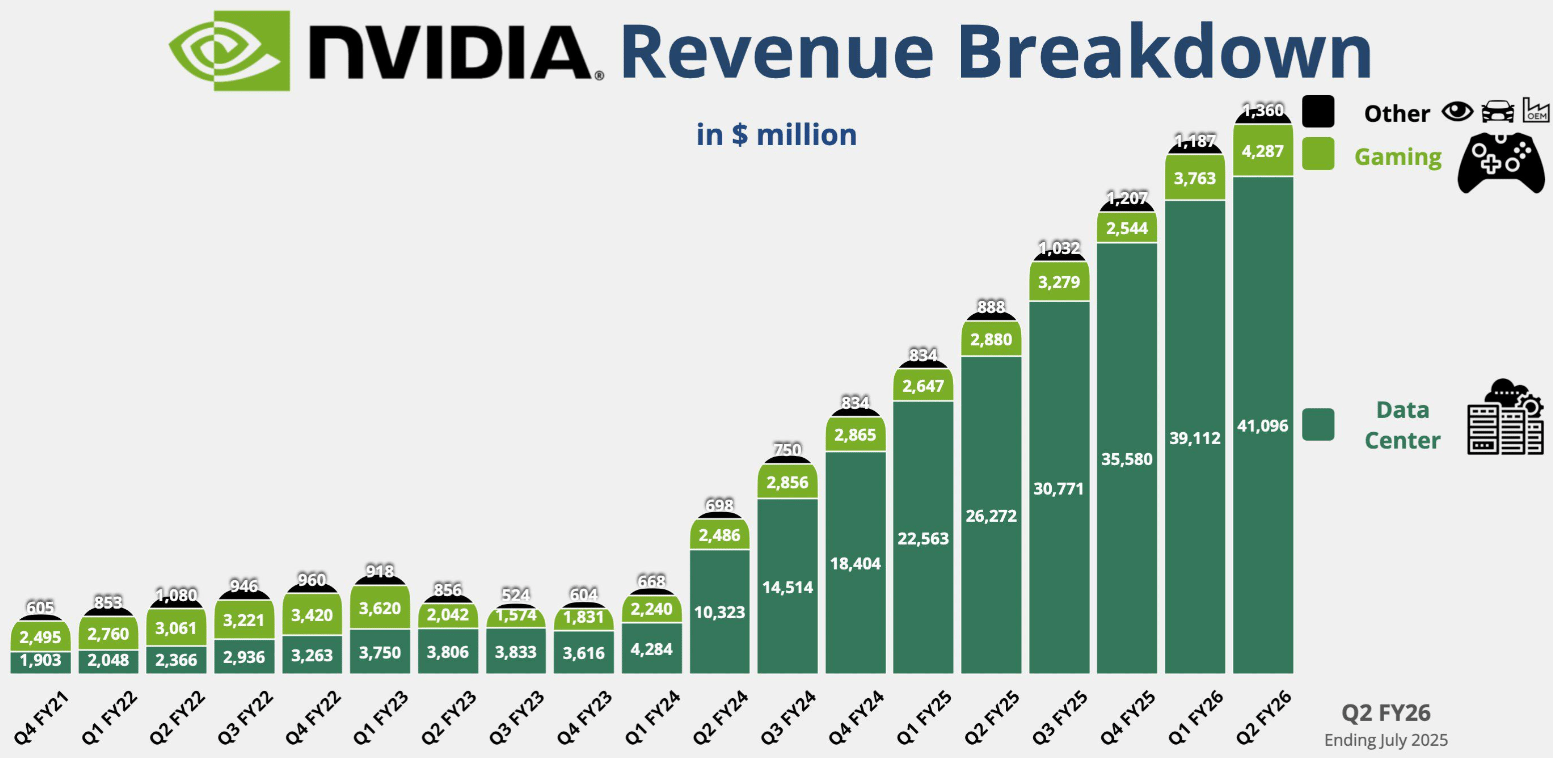

Nvidia’s revenue streams

Nvidia's financial profile has undergone a dramatic transformation. Total annual revenue reached $130.5 billion in FY2025, up 114% year-over-year. This follows $60.9 billion in FY2024 and $26.97 billion in FY2023. The company has shifted from a balanced revenue mix to one dominated almost entirely by enterprise data center infrastructure.

Gross margins reached approximately 75% in FY2025, with an operating margin of 62%—unprecedented for the semiconductor industry. Return on invested capital hit 28.69%, reflecting exceptional capital efficiency.

Data centers (accelerated computing & AI)

Data centers brought in $115.19 billion in FY2025, representing 88.27% of total revenue and 142.37% year-over-year growth. This segment includes A100/H100/B200 GPU accelerators, DGX systems, networking hardware (InfiniBand), software licenses (NVIDIA AI Enterprise), and DGX Cloud subscriptions.

Nvidia’s revenue growth is driven by data centers

Roughly 45% of revenue comes from hyperscale cloud providers: AWS, Google Cloud, Microsoft Azure, and Oracle. The remaining 55% comes from enterprises and sovereign AI initiatives.

Pricing power is extreme. H100 GPUs command $20,000-30,000+ per unit. All data center GPUs have been sold out in recent quarters. Gross margins exceed 70% in this segment.

Sovereign AI is emerging as a major trend, too. Sales to nations building national AI infrastructure are on track to reach $20 billion in 2025, more than double the previous year. This revenue is considered highly "sticky": once a nation invests in proprietary infrastructure, it is likely to continue upgrading and expanding within the same ecosystem.

Gaming (GeForce graphics)

Gaming generated $11.35 billion in FY2025, contributing 8.70% of total revenue with 8.64% year-over-year growth. Products include GeForce RTX graphics cards for PC gaming and the GeForce NOW cloud gaming subscription service.

GeForce NOW enables players to play high-end games without a powerful computer

Nvidia maintains 92% share of the discrete GPU market and 84-94% of the add-in graphics card market. This dominance persists despite AMD's attempts to compete on price and memory capacity.

Gaming functions as an R&D "flywheel." Innovations developed for consumer graphics migrate to data center applications. The segment funds massive research investments that benefit the entire company.

Supply constraints limited Q4 FY2025 gaming shipments. Nvidia prioritized AI chip production at TSMC, leaving gaming demand partially unmet despite strong consumer interest. Data center chips command higher margins and serve a market with seemingly unlimited demand.

Professional visualization (workstation graphics)

Professional visualization, a 3D rendering and simulation product line, brought in $1.88 billion in FY2025, representing 1.44% of total revenue with 20.93% year-over-year growth. Products include RTX/Quadro workstation GPUs, vGPU software, and the Omniverse Enterprise platform for 3D collaboration.

The target market includes architects, engineers, animators, and designers requiring certified hardware and enterprise support. Professional cards cost several thousand dollars each, and annual software licenses provide recurring revenue.

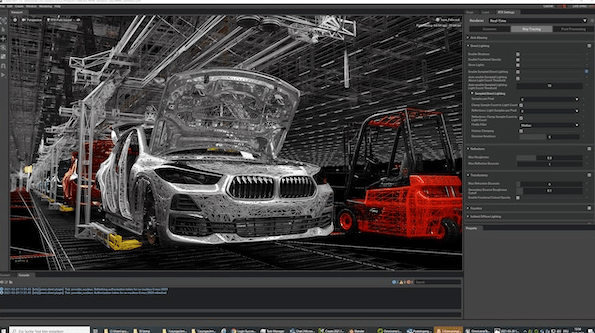

NVIDIA Omniverse enables users to work together on 3D models

Omniverse is strategically important beyond its current revenue contribution. The platform enables "digital twins" for industrial digitalization and provides a training ground for AI perception models. As AI integrates into content creation and design workflows, professional visualization and data center offerings increasingly converge.

Automotive and robotics

Automotive and robotics generated $1.69 billion in FY2025, accounting for 1.30% of total revenue with 55.27% year-over-year growth. Products include DRIVE platform SoCs (Orin and the upcoming Thor) and the Isaac platform for industrial robotics.

The value proposition centers on "Physical AI"—models trained in the cloud deployed at the edge for real-world navigation. Revenue comes from per-vehicle chip sales plus software licenses for Drive OS and mapping data.

Growth has been dramatic. Recent quarters saw year-over-year increases exceeding 100%. Key milestones like Drive Orin adoption by EV makers and the launch of new L4 self-driving platforms suggest this business could accelerate significantly.

Orin is positioned as an autonomous vehicle system-on-a-chip or “mega brain”

The segment remains small but represents a long-term bet. Design cycles in automotive are slow, but Nvidia is positioning to capture the future "AI on wheels" market. If self-driving cars take off, each vehicle could carry thousands of dollars of Nvidia assets through multiple chips and software subscriptions.

OEM & other

The OEM & other revenue line brought in $389 million in FY2025, representing 0.30% of total revenue with 27.12% year-over-year growth. This catch-all segment includes Tegra chips for Nintendo Switch, entry-level GPUs for PC OEMs, and IP licensing.

The segment generated roughly $1 billion annually at Switch's peak but has declined as the console ages. A potential Switch 2 could provide a temporary boost if Nvidia supplies the chip, but the company places no strategic emphasis on this bucket.
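The segment shares quoted in this section can be cross-checked with a few lines of arithmetic. The sketch below uses only the FY2025 segment revenues stated above, in billions of dollars. The variable names are illustrative, and small differences from the quoted percentages can arise from rounding in the inputs.

```python
# FY2025 segment revenue as quoted in the article, in billions of US dollars.
segments_busd = {
    "Data center": 115.19,
    "Gaming": 11.35,
    "Professional visualization": 1.88,
    "Automotive and robotics": 1.69,
    "OEM & other": 0.389,
}

# The segments should add up to roughly the $130.5 billion total.
total_busd = sum(segments_busd.values())

# Each segment's share of total revenue, in percent.
shares_pct = {
    name: round(100 * revenue / total_busd, 2)
    for name, revenue in segments_busd.items()
}

print(f"Total: ${total_busd:.1f}B")
for name, share in shares_pct.items():
    print(f"{name}: {share:.2f}%")
```

The shares sum to 100% and reproduce the percentages given for each segment, which confirms that the five segments account for the full $130.5 billion.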

Nvidia’s cost centers

Nvidia’s operating expenses reached $16.4 billion in FY2025, a 45% increase year-over-year. However, this represented only 12.58% of revenue, down from 18.60% the prior year. The company achieved an operating margin of 62% and net margin of 55%, software-like margins in a hardware business.
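The relationship between these ratios is simple subtraction: operating margin is roughly gross margin minus operating expenses as a share of revenue. A minimal sketch follows, using the rounded figures quoted in this article. Because the inputs are rounded, the results may differ from the stated percentages in the last decimal place.

```python
# Rounded FY2025 figures quoted in the article, in billions of US dollars.
revenue = 130.5
operating_expenses = 16.4
gross_margin = 0.75  # "approximately 75%" per the article

# Operating expenses as a share of revenue.
opex_ratio = operating_expenses / revenue

# Operating margin is what remains of gross margin after operating expenses.
operating_margin = gross_margin - opex_ratio

print(f"Opex as share of revenue: {opex_ratio:.2%}")
print(f"Approximate operating margin: {operating_margin:.1%}")
```

With these inputs the opex ratio comes out at about 12.6% and the operating margin at about 62%, in line with the figures above.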

Research & development (R&D)

R&D spending hit $12.9 billion in FY2025, representing 9.9% of revenue. This was up from $8.7 billion in FY2024. R&D is Nvidia's largest operating expense.

The R&D investment funds the development of new GPU architectures, the CUDA software stack, AI frameworks, and networking protocols. Nvidia's aggressive annual product cadence requires continuous investment.

Nvidia’s R&D spend as a share of revenue has been in decline

A significant portion of Nvidia's 36,000 employees (up 22% year-over-year) work in engineering roles. Stock-based compensation for these engineers is substantial and accounted for in operating expenses.

ROI efficiency is notable. Despite lower absolute R&D spend than Intel's $16.5 billion, Nvidia generates superior returns due to focused GPU/AI investment. Intel's R&D represented over 21% of its revenue, while Nvidia's was only 10%, indicating far higher returns on research investment.

The company plans to continue increasing R&D in absolute terms, with roughly 30% year-over-year growth in operating expenses guided for FY2026. However, if revenues remain high, R&D as a percentage of revenue should stay in the 10-15% range.

Manufacturing and variable expenses

Cost of revenue reached about $32.6 billion in FY2025, resulting in a gross margin around 75%. For a hardware company, this 25% cost-of-revenue ratio is remarkably low, reflecting premium pricing power.

Nvidia has committed $30.8 billion in obligations for inventory and production capacity, plus $5.1 billion in prepayments to secure TSMC priority. These commitments ensure the company can get priority at TSMC but represent massive fixed costs that must be paid regardless of short-term demand.

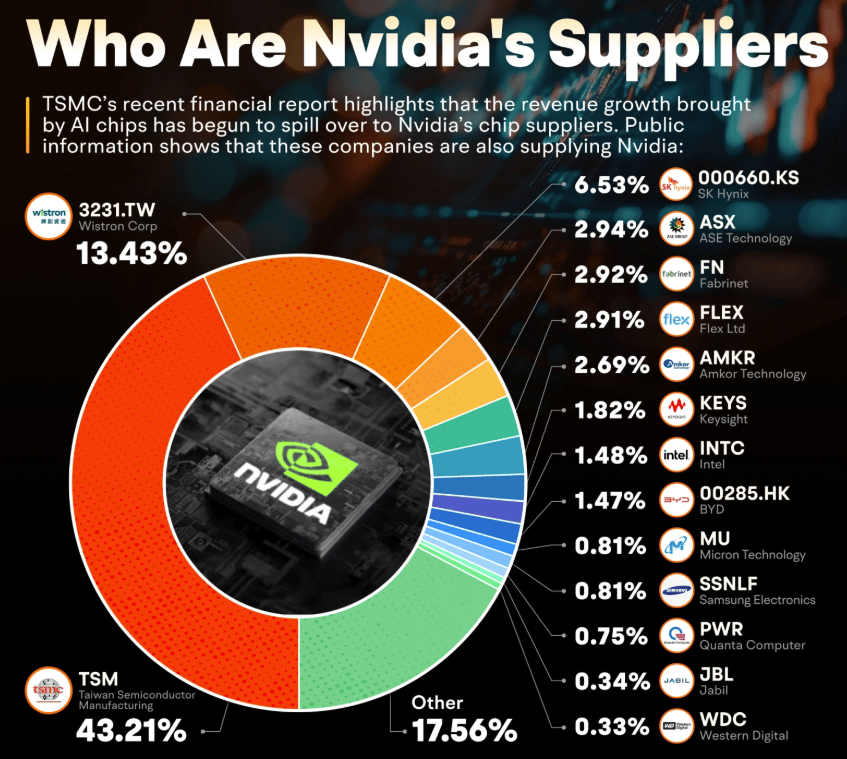

In 2024, TSMC accounted for around 43% of Nvidia’s expenditure on suppliers

The fabless model reduces fixed costs but introduces supply dependency risk. Nvidia mitigates this through multi-sourcing where possible and pre-paying for capacity. If demand were to suddenly drop, the company could be caught with unused supply commitments—an inventory risk that currently seems remote given insatiable demand.

Sales, general & administrative

SG&A expenses totaled $3.49 billion in FY2025, representing just 2.7% of revenue. This is among the lowest ratios in the tech industry. SG&A grew only 32% while revenue grew 114%, demonstrating exceptional operational leverage.
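The operating leverage claim can be made concrete by backing out the prior-year SG&A ratio from the two growth rates. The sketch below derives the FY2024 values from the FY2025 figures and growth rates quoted above. They are implied estimates computed for illustration, not numbers taken from Nvidia's filings.

```python
# FY2025 figures and growth rates quoted in the article (billions of US dollars).
revenue_fy2025 = 130.5
sga_fy2025 = 3.49
revenue_growth = 1.14  # revenue grew 114%
sga_growth = 0.32      # SG&A grew 32%

# Back out the implied prior-year values from the growth rates.
implied_revenue_fy2024 = revenue_fy2025 / (1 + revenue_growth)
implied_sga_fy2024 = sga_fy2025 / (1 + sga_growth)

# SG&A as a share of revenue in each year.
ratio_fy2025 = sga_fy2025 / revenue_fy2025
ratio_fy2024 = implied_sga_fy2024 / implied_revenue_fy2024

print(f"Implied FY2024 revenue: ${implied_revenue_fy2024:.1f}B")
print(f"SG&A ratio FY2024 (implied): {ratio_fy2024:.1%}")
print(f"SG&A ratio FY2025: {ratio_fy2025:.1%}")
```

Under these assumptions the SG&A ratio falls from roughly 4.3% to 2.7% in one year. Revenue more than doubled while overhead grew by about a third, so each additional dollar of sales carried far less overhead.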

Minimal advertising is needed when demand exceeds supply. Sovereign states actively lobby for GPU allocation. Marketing spend focuses on the GTC developer conference, NVIDIA Inception startup program, and technical documentation.

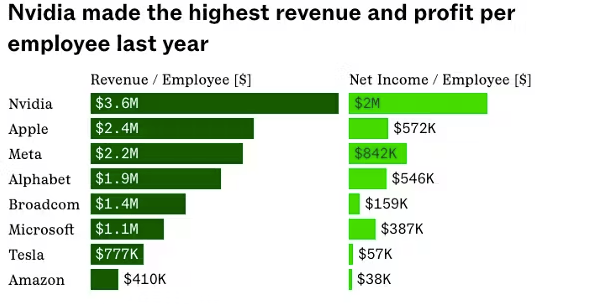

Nvidia has one of the highest revenue and net income per employee

The company maintains a lean structure. Revenue per employee exceeds $4.1 million. Sales efforts are targeted—a handful of deals with cloud providers and OEM partnerships drive massive revenue chunks, eliminating the need for a large salesforce.

One-off and strategic costs

Nvidia occasionally incurs significant extraordinary costs. The failed Arm acquisition resulted in a $1.25 billion breakup fee to SoftBank in 2022. The successful Mellanox integration, by contrast, involved a $6.9 billion acquisition in 2020 that has become a major revenue driver.

Regulatory compliance represents an ongoing concern. Chinese antitrust scrutiny regarding Mellanox may lead to future costs. New export controls require Nvidia to pay the US government 15% of Chinese sales revenue in exchange for export licenses, a structural change that weighs on net margins.

The company also invests in speculative, long-term ventures. Examples discussed earlier include the Omniverse platform for digital twins and the DRIVE and Isaac platforms for autonomous vehicles and robotics, none of which yet contributes a large share of revenue.

Nvidia’s competitors

Nvidia holds 80-92% of the AI accelerator market and over 90% of the discrete GPU market. However, the competitive landscape is shifting from monopoly to complex oligopoly as customers seek alternatives.

AMD (Advanced Micro Devices)

AMD competes directly in data centers, pitting its Instinct MI300X/MI325X accelerators against Nvidia's H100 and Blackwell. AMD's pitch rests on memory capacity and price: the MI300X carries 192GB of HBM3 and the MI325X 256GB of HBM3E, more than Nvidia's typical configurations.

Market share remains heavily tilted toward Nvidia. AMD holds 7-8% of the discrete GPU market and roughly 6% of the AI GPU market, compared to Nvidia's roughly 94% of AI GPUs.

The key weakness is ecosystem depth. ROCm, AMD's alternative to CUDA, has limited adoption despite being open-source. An entire generation of AI researchers built their careers on CUDA. The technical switching costs are prohibitively high.

AMD's strategic moves include acquiring Xilinx for FPGA integration and launching MI350/MI400 architectures. The company targets 20% AI market share by 2027. However, analysts suggest AMD remains at least a generation behind—one assessment noted "AMD will need another decade to try to pass Nvidia" in AI chips.

Intel

Intel offers Gaudi series accelerators positioned as 50% cheaper than H100, Arc discrete GPUs for consumers, and Ponte Vecchio for HPC. Market position is weak: less than 1% discrete GPU market share and under 5% in gaming graphics.

Strengths include deep enterprise relationships, owned fab capacity, and the oneAPI open programming model. Weaknesses are more significant: late market entry, driver issues, organizational turmoil, and the legacy of the failed Larrabee project.

Recent developments include Nvidia replacing Intel in the Dow Jones Industrial Average. The competitive dynamics are shifting—Intel is entering the GPU market while Nvidia enters the CPU market with Grace.

Intel's greatest asset is incumbency in data centers with near-100% CPU share and manufacturing capability. The company could potentially leverage its own fabs to produce AI chips. However, late market entry and underpowered first-generation products limit near-term impact.

Cloud service providers (Internal ASICs)

The most significant long-term threat comes from major cloud providers building their own application-specific integrated circuits. Google's TPUs are used internally for Search and YouTube. TPU v7 "Ironwood" is designed to compete directly with Blackwell.

Amazon offers Trainium 3 for training and Inferentia for inference as cheaper alternatives on AWS. Microsoft and Meta are developing custom accelerators—Project Athena and MTIA—for internal workloads.

Every internal chip deployment reduces potential Nvidia sales. This represents a vertical integration threat. However, limitations exist: closed ecosystems, no standalone sales, and lack of general versatility compared to Nvidia GPUs.

Nvidia's defense centers on rapid innovation, complete platform solutions, and maintaining software ecosystem advantages. The company's full-stack approach—combining GPUs, CPUs, networking, and software—makes it difficult for single-purpose ASICs to match the flexibility and performance of Nvidia's integrated systems.

The future of Nvidia

Nvidia is executing a strategic transformation from "accelerated computing" provider to primary operator of "AI Superfactories." The company's vision extends beyond selling chips to becoming foundational infrastructure for the global AI economy.

The product roadmap maintains aggressive momentum. Rubin architecture began shipping in early 2026 with six new chips, including the Vera CPU. Nvidia maintains its annual innovation cadence to stay ahead of competitors who are still catching up to previous generations.

Revenue projections reflect extraordinary growth expectations. Q4 FY2026 guidance of $65 billion suggests continued acceleration. The potential $500 billion in Blackwell and Rubin sales by end of 2026 would represent nearly four times FY2025's total revenue in just two product lines.

Geopolitical challenges loom large. US export controls limit China access, which previously represented over 15% of revenue. A new 15% revenue-sharing requirement with the US government for Chinese sales adds more costs.

Market expansion targets include automotive projected to reach $5 billion annual run-rate, edge computing via Jetson and robotics platforms, and industrial AI through the IGX platform. Each represents a bet on AI moving from centralized data centers to distributed edge deployments.

The company that once made graphics cards for gamers now powers the infrastructure of artificial intelligence. Whether that position proves durable will depend on execution, innovation, and the ability to maintain its cultural paranoia of being "30 days away from going out of business" even as it generates hundreds of billions in revenue.